Measuring the power draw of my PC and PS5

A few posts ago I showed how to measure power consumption with Shelly’s IoT device. In this post I’m going to share the measurement results for my PC and my PlayStation 5. I measured the total power draw of each device directly at the wall socket.

Let’s start with the PC. Here is my configuration (yes, it’s an anti-RGB config):

- MSI B450 Tomahawk MAX

- AMD Ryzen 5 3600 + Noctua NH-U12S chromax.black

- MSI GTX 1660 Super Gaming X

- Crucial Ballistix 2×8 GB CL16 3200MHz

- Crucial MX-500 500 GB + WD Blue SSD 1 TB

- 4× Noctua NF-S12B Redux-1200 PWM fans

- Fractal Design Meshify C case

- be quiet! Pure Power 11 500 W

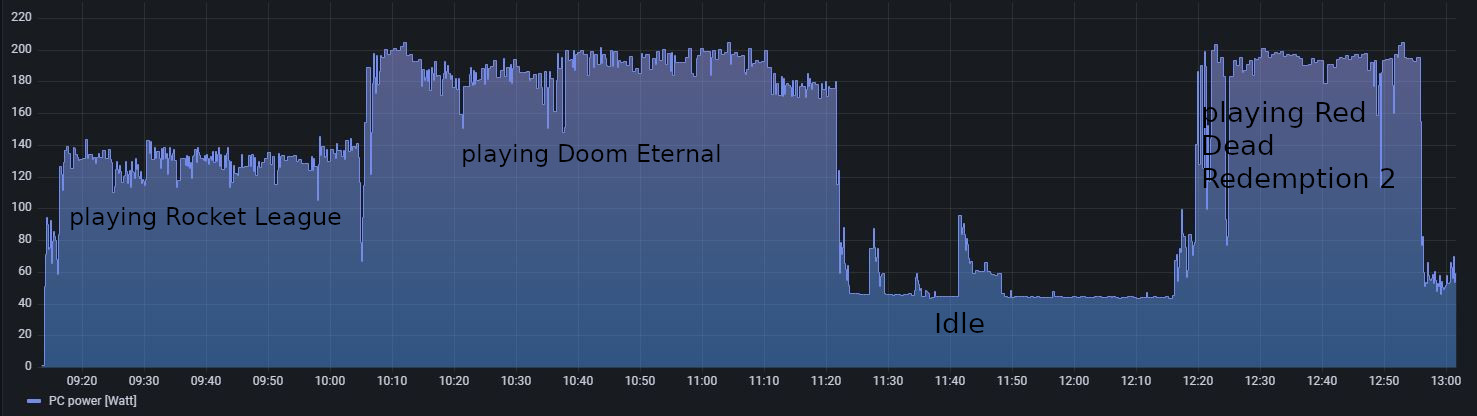

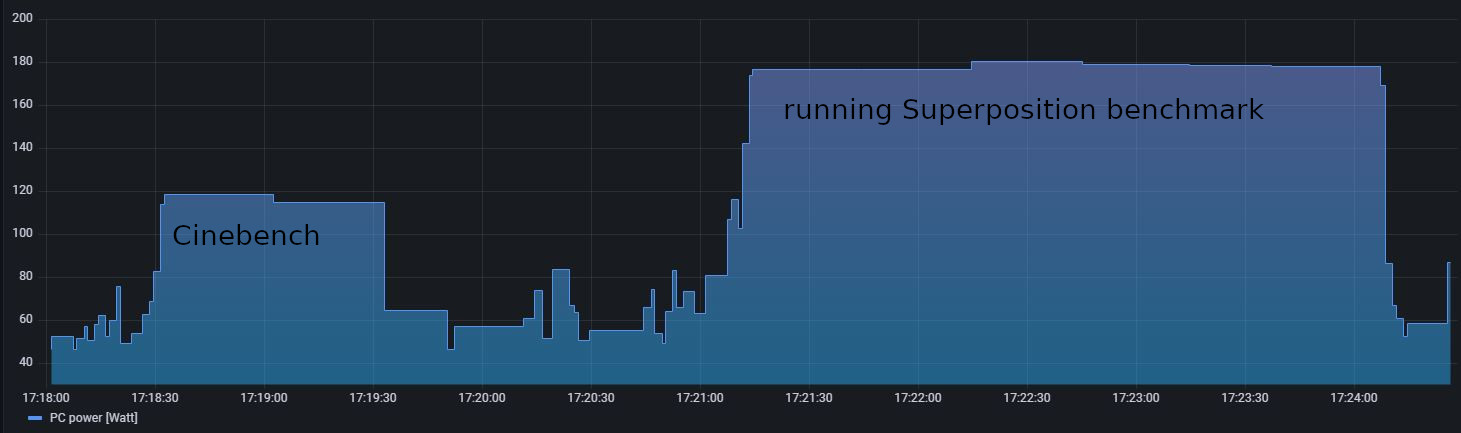

As you can see, I played 3 different games and also measured the power draw at idle. Why is Rocket League different from the other two? The power draw while playing Rocket League is lower because I capped the framerate at 120 fps (I don’t need 400-500 fps in RL), so the GPU utilization wasn’t 100%. When I was playing Doom Eternal and RDR2, however, no frame limit was applied, so the GPU utilization stayed around 99% the whole time. In these cases the system’s total power draw was approx. 200 W, so I don’t really need a 500 W power supply for this system without overclocking. Of course my system’s power draw can exceed 200 W if both CPU and GPU utilization go to 100%, but that’s usually not the case during gaming; generally the GPU dominates the power consumption. Lastly, I ran some benchmarks as well. Here is the result. (I should have run AIDA64 to stress both the CPU and the GPU at the same time; that’s my mistake.)

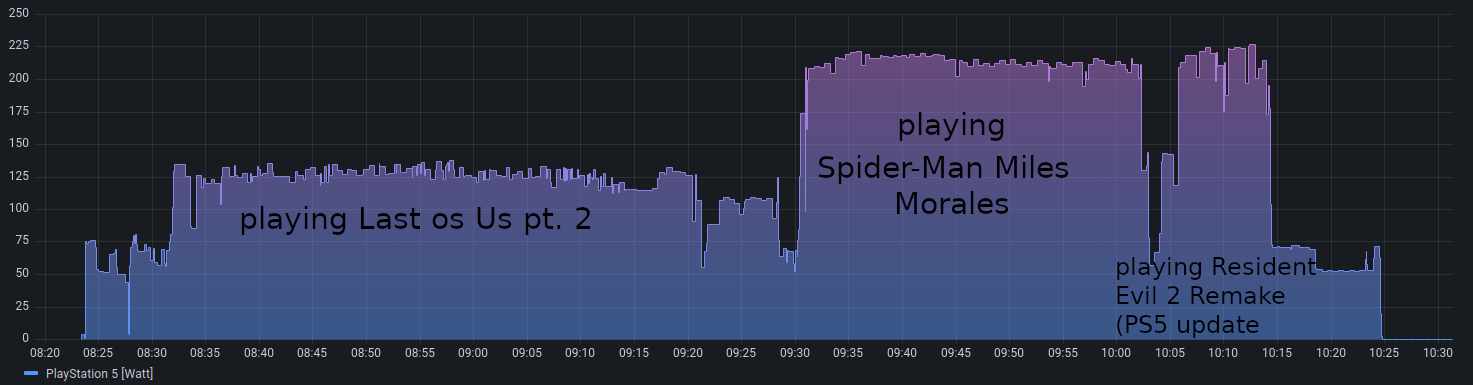
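To put the roughly 200 W gaming figure next to the 500 W rating of the power supply, here is a minimal Python sketch of the arithmetic. The wall socket reading includes the PSU’s own conversion losses, so the load on the PSU’s output side is a bit lower than what the socket shows. The 90% efficiency value and the function name `psu_load_fraction` are my own assumptions for illustration, not measured or official numbers.

```python
def psu_load_fraction(wall_watts: float,
                      psu_rated_watts: float,
                      efficiency: float = 0.90) -> float:
    """Estimate how much of the PSU's rated output is in use.

    wall_watts      -- power measured at the socket (AC side)
    psu_rated_watts -- rated output of the PSU (DC side)
    efficiency      -- ASSUMED conversion efficiency, not a measured value
    """
    dc_watts = wall_watts * efficiency  # power actually delivered to the components
    return dc_watts / psu_rated_watts


wall_watts = 200.0       # approx. draw while gaming without a frame cap
psu_rated_watts = 500.0  # rating of the power supply in this build

load = psu_load_fraction(wall_watts, psu_rated_watts)
print(f"Estimated PSU load: {load:.0%}")  # prints "Estimated PSU load: 36%"
```

Under these assumptions the power supply runs at a bit more than a third of its rating during gaming, which leaves plenty of headroom.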

I also measured the power consumption of my PlayStation 5 (disc version). You can see the results below:

I presume that the GPU utilization was around 99% when I was playing Spider-Man and RE2 Remake (with the PS5 patch), because I played both games in VRR mode (I have a 120 Hz VRR display) and the framerate didn’t reach 120 fps in either case. While playing The Last of Us Part II, the framerate was limited to 60 fps.