BGP Route Reflector Design Options (Part 2): Hierarchical 2-tier Route Reflectors, MCIDs

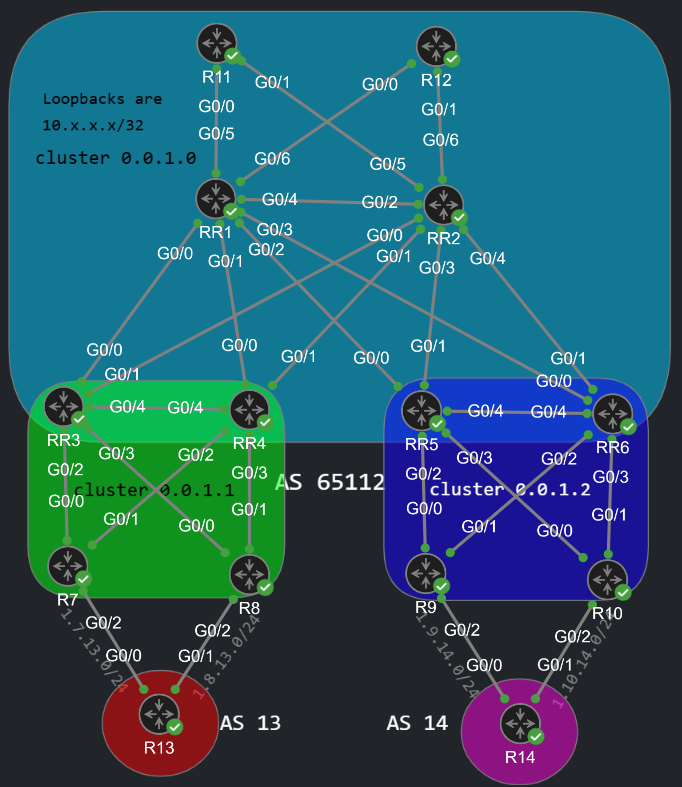

The problem with the previous Route Reflector (RR) design is that it has a single point of failure: if an RR goes down, it can cause traffic black holes. This time I tried to build a more redundant topology with a hierarchical RR design.

Having 6 Reflectors for 10 iBGP routers is probably overkill for a real-world environment, but it's a great way to learn how RRs and clustering work. We have two tier-1 RRs: RR1 and RR2. Each of them has 6 clients: RR3, RR4, RR5, RR6, R11 and R12. RR1 and RR2 are configured as iBGP peers of each other, but they are non-clients of each other. I've read a few Cisco design guides online, and they actually recommend a full mesh of iBGP sessions between the tier-1 RRs. In this case we only have two, so that "mesh" is a single non-client session between them. Here is the configuration of RR1:

RR1#show run | sec bgp
router bgp 65112
 bgp cluster-id 0.0.1.0
 bgp log-neighbor-changes
 neighbor 10.2.2.2 remote-as 65112
 neighbor 10.2.2.2 update-source Loopback0
 neighbor 10.3.3.3 remote-as 65112
 neighbor 10.3.3.3 update-source Loopback0
 neighbor 10.4.4.4 remote-as 65112
 neighbor 10.4.4.4 update-source Loopback0
 neighbor 10.5.5.5 remote-as 65112
 neighbor 10.5.5.5 update-source Loopback0
 neighbor 10.6.6.6 remote-as 65112
 neighbor 10.6.6.6 update-source Loopback0
 neighbor 10.11.11.11 remote-as 65112
 neighbor 10.11.11.11 update-source Loopback0
 neighbor 10.12.12.12 remote-as 65112
 neighbor 10.12.12.12 update-source Loopback0
 !
 address-family ipv4
  neighbor 10.2.2.2 activate
  neighbor 10.3.3.3 activate
  neighbor 10.3.3.3 route-reflector-client
  neighbor 10.4.4.4 activate
  neighbor 10.4.4.4 route-reflector-client
  neighbor 10.5.5.5 activate
  neighbor 10.5.5.5 route-reflector-client
  neighbor 10.6.6.6 activate
  neighbor 10.6.6.6 route-reflector-client
  neighbor 10.11.11.11 activate
  neighbor 10.11.11.11 route-reflector-client
  neighbor 10.12.12.12 activate
  neighbor 10.12.12.12 route-reflector-client
 exit-address-family

The configuration of RR2 is exactly the same (including cluster-id 0.0.1.0), except that it peers with 10.1.1.1 instead of 10.2.2.2. The tier-2 Reflectors (RR3, RR4, RR5 and RR6) are both RR clients (of RR1 and RR2) and RRs for their own clusters. Here is the configuration of RR3; RR4 is configured exactly the same way:

RR3#show run | sec bgp
router bgp 65112
 bgp cluster-id 0.0.1.1
 bgp log-neighbor-changes
 neighbor 10.1.1.1 remote-as 65112
 neighbor 10.1.1.1 update-source Loopback0
 neighbor 10.2.2.2 remote-as 65112
 neighbor 10.2.2.2 update-source Loopback0
 neighbor 10.7.7.7 remote-as 65112
 neighbor 10.7.7.7 update-source Loopback0
 neighbor 10.8.8.8 remote-as 65112
 neighbor 10.8.8.8 update-source Loopback0
 !
 address-family ipv4
  neighbor 10.1.1.1 activate
  neighbor 10.2.2.2 activate
  neighbor 10.7.7.7 activate
  neighbor 10.7.7.7 route-reflector-client
  neighbor 10.8.8.8 activate
  neighbor 10.8.8.8 route-reflector-client
 exit-address-family

In this example the tier-2 RRs (RR3-RR4 and RR5-RR6) don't peer with each other. Technically they could, either in a full mesh like on tier 1, or even as clients of each other. Leaving those sessions out costs some redundancy, but it spares the CPU the extra route-replication overhead. RR5 and RR6 are configured identically, basically as a mirror image of RR3 and RR4:

RR5#show run | sec bgp
router bgp 65112
 bgp cluster-id 0.0.1.2
 bgp log-neighbor-changes
 neighbor 10.1.1.1 remote-as 65112
 neighbor 10.1.1.1 update-source Loopback0
 neighbor 10.2.2.2 remote-as 65112
 neighbor 10.2.2.2 update-source Loopback0
 neighbor 10.9.9.9 remote-as 65112
 neighbor 10.9.9.9 update-source Loopback0
 neighbor 10.10.10.10 remote-as 65112
 neighbor 10.10.10.10 update-source Loopback0
 !
 address-family ipv4
  neighbor 10.1.1.1 activate
  neighbor 10.2.2.2 activate
  neighbor 10.9.9.9 activate
  neighbor 10.9.9.9 route-reflector-client
  neighbor 10.10.10.10 activate
  neighbor 10.10.10.10 route-reflector-client
 exit-address-family
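To see where the redundancy comes from, here is a small Python model of the client relationships configured above. It is a sketch under assumptions: the dictionaries are my transcription of the RR1, RR3 and RR5 configurations (RR2, RR4 and RR6 are assumed to mirror them, as described), and the helper names are hypothetical.

```python
# Hypothetical model of AS 65112's iBGP design, transcribed from the
# RR1/RR3/RR5 configs above (RR2/RR4/RR6 are assumed to mirror them).
TIER1_CLIENTS = {"RR3", "RR4", "RR5", "RR6", "R11", "R12"}

# Route reflector -> set of its route-reflector-clients.
CLIENTS = {
    "RR1": TIER1_CLIENTS,
    "RR2": TIER1_CLIENTS,
    "RR3": {"R7", "R8"},
    "RR4": {"R7", "R8"},
    "RR5": {"R9", "R10"},
    "RR6": {"R9", "R10"},
}

# Plain (non-client) iBGP sessions: only the tier-1 pair peers this way.
NON_CLIENT_SESSIONS = [("RR1", "RR2")]

ALL_ROUTERS = set(CLIENTS) | set().union(*CLIENTS.values())


def reflectors_of(router):
    """Return every RR that has `router` configured as a client."""
    return {rr for rr, clients in CLIENTS.items() if router in clients}


def session_count():
    """Each RR-client relationship is one session, plus the non-client ones."""
    return sum(len(c) for c in CLIENTS.values()) + len(NON_CLIENT_SESSIONS)


def full_mesh_count(n):
    """Sessions needed for a classic iBGP full mesh of n routers."""
    return n * (n - 1) // 2


if __name__ == "__main__":
    for router in sorted(ALL_ROUTERS - {"RR1", "RR2"}):
        print(router, "is a client of", sorted(reflectors_of(router)))
    print("sessions in this design:", session_count())
    print("sessions in a full mesh:", full_mesh_count(len(ALL_ROUTERS)))
```

In this model every router below tier 1 is a client of exactly two RRs, which is the redundancy the previous design lacked, and the model counts 21 iBGP sessions, compared with 66 for a full mesh of the 12 routers it contains.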

The cluster-id is of course only configured on the RRs themselves, not on the clients. The tier-2 RRs (RR3-RR6) only have the cluster-id of their own cluster configured; they are not aware that they are also RR clients in cluster 0.0.1.0. Now let's look at how an NLRI propagates between the RRs when I advertise a prefix in AS 14:

R14(config)#router bgp 14
R14(config-router)#address-family ipv4 unicast
R14(config-router-af)#network 14.14.14.14 mask 255.255.255.255

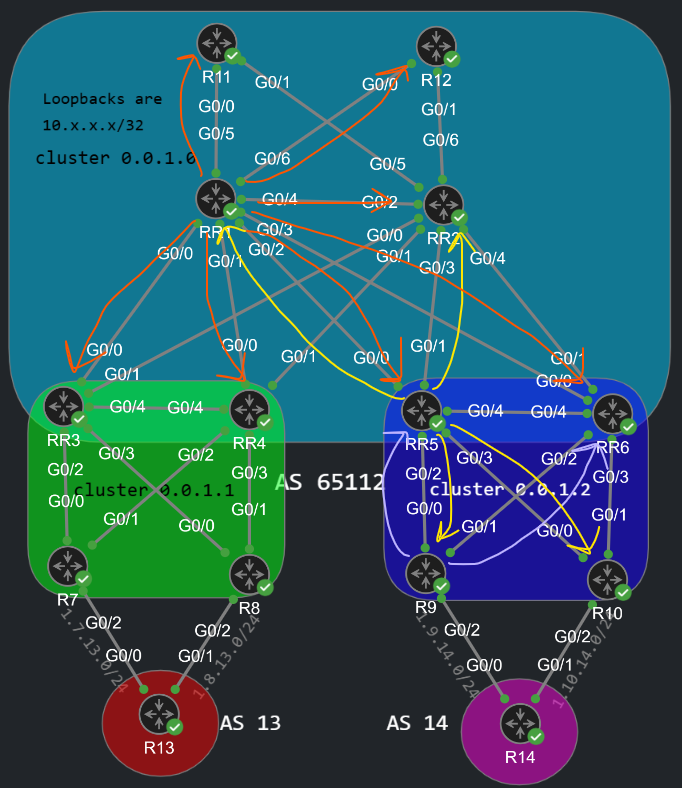

I didn't draw every reflection and BGP Update in the picture above (otherwise it would be a mess), only those of three routers (R9, RR5 and RR1), to show how the control plane works in this topology. When R9 receives the NLRI from R14, his eBGP peer, he forwards it to his two iBGP peers: RR5 and RR6. R9 is an RR client of RR5, so RR5 reflects this NLRI to everyone: clients (R9, R10) and non-clients (RR1, RR2). RR1 receives the NLRI from RR5, his client, so he also reflects it to everyone: clients (RR3, RR4, RR5, RR6, R11, R12) and non-clients (RR2). RR3 and RR4 receive the NLRI from RR1 and RR2, which are non-clients from their point of view, so they only reflect it to their clients (R7 and R8). That's how the control plane works within AS 65112.
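The forwarding decisions in this paragraph follow the standard route reflection rules (RFC 4456): a route from an eBGP peer goes to all iBGP peers, a route from a client goes to clients and non-clients, a route from a non-client goes to clients only, and a plain iBGP speaker does not re-advertise iBGP-learned routes. The Python sketch below encodes those rules for this lab. The topology tables are my own transcription of the configs, the function name is hypothetical, and, matching what this lab shows, the sender is not excluded from the target set.

```python
# Sketch of the RFC 4456 forwarding rules, applied to this lab's topology.
# CLIENTS / NON_CLIENTS are my transcription of the configs (an assumption).
TIER1_CLIENTS = {"RR3", "RR4", "RR5", "RR6", "R11", "R12"}
CLIENTS = {
    "RR1": TIER1_CLIENTS, "RR2": TIER1_CLIENTS,
    "RR3": {"R7", "R8"}, "RR4": {"R7", "R8"},
    "RR5": {"R9", "R10"}, "RR6": {"R9", "R10"},
}
# iBGP peers that are NOT clients, from each router's own point of view.
NON_CLIENTS = {
    "RR1": {"RR2"}, "RR2": {"RR1"},
    "RR3": {"RR1", "RR2"}, "RR4": {"RR1", "RR2"},
    "RR5": {"RR1", "RR2"}, "RR6": {"RR1", "RR2"},
    "R7": {"RR3", "RR4"}, "R8": {"RR3", "RR4"},
    "R9": {"RR5", "RR6"}, "R10": {"RR5", "RR6"},
    "R11": {"RR1", "RR2"}, "R12": {"RR1", "RR2"},
}


def send_targets(router, sender):
    """Return the iBGP peers `router` advertises a route learned from `sender` to."""
    clients = CLIENTS.get(router, set())
    non_clients = NON_CLIENTS[router]
    if sender not in clients | non_clients:
        # Learned via eBGP: advertise to every iBGP peer.
        return clients | non_clients
    if not clients:
        # Not a reflector: iBGP-learned routes are not re-advertised to iBGP.
        return set()
    if sender in clients:
        # From a client: reflect to clients and non-clients (sender included).
        return clients | non_clients
    # From a non-client: reflect to clients only.
    return set(clients)


if __name__ == "__main__":
    for router, sender in [("R9", "R14"), ("RR5", "R9"), ("RR1", "RR5"), ("RR3", "RR1")]:
        print(f"{router} (learned from {sender}) ->", sorted(send_targets(router, sender)))
```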

Many people don't know that when RR5 sends the NLRI to RR1 and RR2, he also gets a "reflected" BGP Update back from both RR1 and RR2:

RR5#debug ip bgp
BGP(0): 10.1.1.1 rcv UPDATE about 14.14.14.14/32 -- DENIED due to: CLUSTERLIST contains our own cluster ID;
BGP: 10.2.2.2 Route Reflector cluster loop; Received cluster-id 0.0.1.2
BGP(0): 10.2.2.2 rcv UPDATE about 14.14.14.14/32 -- DENIED due to: CLUSTERLIST contains our own cluster ID;
BGP: 10.1.1.1 Route Reflector cluster loop; Received cluster-id 0.0.1.2

So the Reflectors don't care whom they got the NLRI from in the first place: they reflect it to everyone, including the original sender. There is no "split-horizon" rule or anything like that for Reflectors. The reflected NLRI is of course dropped by the Cluster-list loop prevention mechanism, which we saw in the previous post. Also don't forget that in this configuration the tier-1 RRs forward the NLRI to each other as well: they are non-clients of each other, but they received the NLRI from a client (RR5 and RR6):

RR1#debug ip bgp updates
BGP(0): 10.2.2.2 rcv UPDATE about 14.14.14.14/32 -- DENIED due to: reflected from the same cluster;
BGP: 10.2.2.2 RR in same cluster. Reflected update dropped
BGP(0): 10.2.2.2 rcv UPDATE w/ attr: nexthop 10.9.9.9, origin i, localpref 100, metric 0, originator 10.9.9.9, clusterlist 0.0.1.0 0.0.1.2, merged path 14, AS_PATH , community , extended community , SSA attribute
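Both drops come from the same receive-side check: an RR discards any update whose Cluster-list already contains its own cluster-id. Below is a minimal Python sketch of that check together with the prepend that precedes it. The `Update` type and the function names are hypothetical, router IDs are assumed to equal the loopback addresses, and only the Cluster-list part of loop prevention is modelled (the ORIGINATOR_ID check is left out).

```python
from dataclasses import dataclass
from typing import Optional, Tuple

# Cluster-ids configured in this lab (each RR pair shares one cluster-id).
CLUSTER_ID = {
    "RR1": "0.0.1.0", "RR2": "0.0.1.0",
    "RR3": "0.0.1.1", "RR4": "0.0.1.1",
    "RR5": "0.0.1.2", "RR6": "0.0.1.2",
}


@dataclass(frozen=True)
class Update:
    """Hypothetical, heavily simplified BGP Update: just the RR attributes."""
    prefix: str
    originator: Optional[str] = None
    cluster_list: Tuple[str, ...] = ()


def reflect(rr, update, learned_from_rid):
    """What `rr` sends when it reflects `update`: set ORIGINATOR_ID if it is
    still empty, and prepend the RR's own cluster-id to the CLUSTER_LIST."""
    return Update(
        prefix=update.prefix,
        originator=update.originator or learned_from_rid,
        cluster_list=(CLUSTER_ID[rr],) + update.cluster_list,
    )


def accepts(router, update):
    """Receive-side check: drop the update if our cluster-id is already listed."""
    own = CLUSTER_ID.get(router)
    return own is None or own not in update.cluster_list


if __name__ == "__main__":
    from_r9 = Update("14.14.14.14/32")            # as sent by R9 (no RR attributes)
    rr5_out = reflect("RR5", from_r9, "10.9.9.9")  # RR5 -> RR1/RR2
    rr1_out = reflect("RR1", rr5_out, "10.5.5.5")  # RR1 -> everyone, incl. RR5 and RR2
    print(rr1_out)
    print("RR5 accepts:", accepts("RR5", rr1_out))  # its own 0.0.1.2 is listed
    print("RR2 accepts:", accepts("RR2", rr1_out))  # shares 0.0.1.0 with RR1
    print("RR3 accepts:", accepts("RR3", rr1_out))
```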

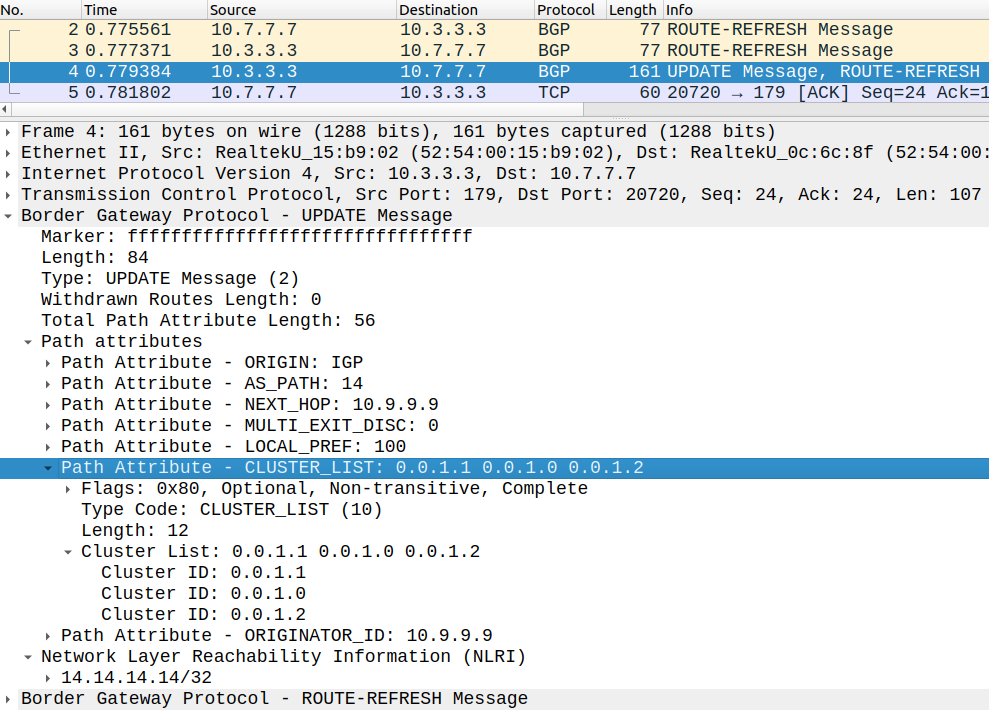

So the tier-1 RRs also drop each other's reflected Update, for the same reason. The tier-2 RRs don't peer with each other, so they never get a reflected BGP Update directly from another tier-2 Reflector. Now let's focus on R7, for example: when he gets the NLRI, it carries three Cluster-IDs in the Cluster-list attribute.

The NLRI passes through three clusters: each RR that reflects the BGP Update prepends his cluster-id to the Cluster-list. First RR5 (and RR6) added his cluster-id (0.0.1.2), then RR1 (and RR2) prepended 0.0.1.0 (0.0.1.0 0.0.1.2), and finally RR3 prepended his cluster-id when reflecting the NLRI (0.0.1.1 0.0.1.0 0.0.1.2). Another important thing: what if an RR originates a prefix itself, for example:

RR2(config)#router bgp 65112
RR2(config-router)#address-family ipv4 unicast
RR2(config-router-af)#network 10.2.2.2 mask 255.255.255.255

In this case RR2 advertises his own loopback. Does he add the cluster-list attribute to the NLRI? No, he doesn't. The cluster-list is only added to reflected prefixes, i.e. NLRIs that the RR received from an iBGP client or non-client. So RR1 receives the BGP Update without a cluster-list:

RR1>
BGP(0): 10.2.2.2 rcvd UPDATE w/ attr: nexthop 10.2.2.2, origin i, localpref 100, metric 0
BGP(0): 10.2.2.2 rcvd 10.2.2.2/32
BGP(0): Revise route installing 1 of 1 routes for 10.2.2.2/32 -> 10.2.2.2(global) to main IP table
BGP(0): (base) 10.11.11.11 send UPDATE (format) 10.2.2.2/32, next 10.2.2.2, metric 0, path Local

But when RR1 reflects the NLRI (to clients only (!), because he received it from a non-client), he adds his cluster-id 0.0.1.0 to the cluster-list. So RR3 receives the NLRI with a cluster-list attribute (and with RR2 as the originator):

RR3>
BGP(0): 10.1.1.1 rcvd UPDATE w/ attr: nexthop 10.2.2.2, origin i, localpref 100, metric 0, originator 10.2.2.2, clusterlist 0.0.1.0
BGP(0): 10.1.1.1 rcvd 10.2.2.2/32
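Putting the last two observations together: a locally originated route carries no Cluster-list (and no ORIGINATOR_ID), while every reflection prepends exactly one cluster-id. The Python sketch below replays both cases from this post, R9's 14.14.14.14/32 reaching R7 and RR2's loopback reaching RR3. The `Route` type and the helpers are hypothetical, and router IDs are assumed to equal the loopback addresses (consistent with the originator values in the debug output).

```python
from dataclasses import dataclass
from typing import Optional, Tuple

CLUSTER_ID = {
    "RR1": "0.0.1.0", "RR2": "0.0.1.0",
    "RR3": "0.0.1.1", "RR4": "0.0.1.1",
    "RR5": "0.0.1.2", "RR6": "0.0.1.2",
}


@dataclass(frozen=True)
class Route:
    """Hypothetical simplified route: prefix plus the RR-related attributes."""
    prefix: str
    next_hop: str
    originator: Optional[str] = None
    cluster_list: Tuple[str, ...] = ()


def originate(prefix, router_id):
    """A route injected locally (network statement): no RR attributes."""
    return Route(prefix, next_hop=router_id)


def reflect(rr, route, learned_from_rid):
    """Reflection keeps the next hop, sets ORIGINATOR_ID once, prepends cluster-id."""
    return Route(
        route.prefix,
        route.next_hop,
        route.originator or learned_from_rid,
        (CLUSTER_ID[rr],) + route.cluster_list,
    )


def along(route, hops):
    """Reflect `route` through a chain of (rr, learned_from_router_id) hops."""
    for rr, learned_from in hops:
        route = reflect(rr, route, learned_from)
    return route


if __name__ == "__main__":
    # RR2 originates its loopback; RR1 reflects it to its clients (e.g. RR3).
    loopback = originate("10.2.2.2/32", "10.2.2.2")
    print("RR1 receives:", loopback)
    print("RR3 receives:", along(loopback, [("RR1", "10.2.2.2")]))

    # R9 advertises 14.14.14.14/32 with next hop 10.9.9.9 (as in the debug
    # output); it reaches R7 via RR5 -> RR1 -> RR3.
    ebgp = Route("14.14.14.14/32", next_hop="10.9.9.9")
    hops = [("RR5", "10.9.9.9"), ("RR1", "10.5.5.5"), ("RR3", "10.1.1.1")]
    print("R7 receives:", along(ebgp, hops))
```

Because each RR pair shares a cluster-id, the redundant path RR6 -> RR2 -> RR4 produces exactly the same Cluster-list at R7.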

Client-to-client reflections

On the Route Reflectors we can also use the following command:

RR1(config-router)#no bgp client-to-client reflection all

If we disable client-to-client reflection (which is enabled by default, of course), NLRIs received from a client are no longer reflected to any other client. They are still reflected to non-client iBGP peers, though (and advertised to eBGP peers, of course). This option is meant for designs where the clients are already fully meshed with each other. The moment I issue this command, RR1 withdraws the NLRI from all of his clients:

RR3#
BGP(0): 10.1.1.1 rcv UPDATE about 14.14.14.14/32 -- withdrawn

Now RR3, for example, only gets this NLRI from RR2. If I did the same on RR2, the routers in cluster 0.0.1.1 as well as R11 and R12 would lose reachability to AS 14. RR1 and RR2 would still forward the NLRI to each other, though, because they are non-clients of each other:

RR2>
BGP(0): 10.1.1.1 rcv UPDATE about 14.14.14.14/32 -- DENIED due to: reflected from the same cluster;
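To check the reachability claim above, the following Python sketch floods 14.14.14.14/32 through a model of AS 65112, applying the reflection rules, the Cluster-list loop check and an optional per-RR client-to-client switch. Assumptions: the topology tables are my transcription of the configs, each router simply re-advertises the first copy it accepts (a simplification of best-path selection), the ORIGINATOR_ID check is not modelled, and all names are hypothetical.

```python
from collections import deque

CLUSTER_ID = {"RR1": "0.0.1.0", "RR2": "0.0.1.0", "RR3": "0.0.1.1",
              "RR4": "0.0.1.1", "RR5": "0.0.1.2", "RR6": "0.0.1.2"}
TIER1_CLIENTS = {"RR3", "RR4", "RR5", "RR6", "R11", "R12"}
CLIENTS = {"RR1": TIER1_CLIENTS, "RR2": TIER1_CLIENTS,
           "RR3": {"R7", "R8"}, "RR4": {"R7", "R8"},
           "RR5": {"R9", "R10"}, "RR6": {"R9", "R10"}}
NON_CLIENTS = {"RR1": {"RR2"}, "RR2": {"RR1"},
               "RR3": {"RR1", "RR2"}, "RR4": {"RR1", "RR2"},
               "RR5": {"RR1", "RR2"}, "RR6": {"RR1", "RR2"},
               "R7": {"RR3", "RR4"}, "R8": {"RR3", "RR4"},
               "R9": {"RR5", "RR6"}, "R10": {"RR5", "RR6"},
               "R11": {"RR1", "RR2"}, "R12": {"RR1", "RR2"}}
AS65112 = set(NON_CLIENTS)


def send_targets(router, sender, c2c):
    """RFC 4456 rules; c2c[router] == False models 'no bgp client-to-client reflection'."""
    clients = CLIENTS.get(router, set())
    peers = clients | NON_CLIENTS[router]
    if sender not in peers:           # eBGP-learned
        return peers
    if not clients:                   # plain iBGP speaker
        return set()
    if sender in clients:             # from a client
        return peers if c2c.get(router, True) else set(NON_CLIENTS[router])
    return set(clients)               # from a non-client


def propagate(origin, ebgp_peer, c2c=None):
    """Return the set of AS 65112 routers that end up with the prefix."""
    c2c = c2c or {}
    have = {origin}
    queue = deque([(origin, ebgp_peer, ())])  # (router, learned_from, cluster_list)
    while queue:
        router, learned_from, cluster_list = queue.popleft()
        ibgp_peers = CLIENTS.get(router, set()) | NON_CLIENTS[router]
        if router in CLUSTER_ID and learned_from in ibgp_peers:
            cluster_list = (CLUSTER_ID[router],) + cluster_list  # reflecting
        for peer in send_targets(router, learned_from, c2c):
            if CLUSTER_ID.get(peer) in cluster_list:
                continue              # peer drops it: cluster loop
            if peer not in have:
                have.add(peer)
                queue.append((peer, router, cluster_list))
    return have


if __name__ == "__main__":
    scenarios = {"no RR": {}, "RR1 only": {"RR1": False},
                 "RR1 and RR2": {"RR1": False, "RR2": False}}
    for name, c2c in scenarios.items():
        missing = AS65112 - propagate("R9", "R14", c2c)
        print(f"c2c disabled on {name}: prefix missing on", sorted(missing))
```

With client-to-client reflection disabled on RR1 only, the model still delivers the prefix everywhere through RR2; with it disabled on both tier-1 RRs, exactly RR3, RR4, R7, R8, R11 and R12 lose it, which matches the reasoning above.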

Multiple Cluster-IDs

We can also configure neighbor-specific Cluster-IDs, like this:

RR1(config-router)#neighbor 10.5.5.5 cluster-id 5.5.5.5

By doing this, we have multiple Cluster-IDs configured on a single iBGP router: the 'global' Cluster-ID (configured with bgp cluster-id) and neighbor-specific Cluster-IDs like the one above. What does this command do? First, it changes the loop prevention mechanism: we now discard any NLRI whose Cluster-list contains ANY of our neighbor-specific Cluster-IDs, or of course our global Cluster-ID. So if RR5 or RR6 sent us an NLRI with a Cluster-list containing 5.5.5.5, we would discard that NLRI. Second, when RR1 reflects an NLRI received from 10.5.5.5 (RR5), RR1 prepends the neighbor-specific Cluster-ID to the Cluster-list. For a neighbor without a neighbor-specific Cluster-ID, we prepend our global Cluster-ID instead.
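The two effects of a neighbor-specific Cluster-ID described above, a wider loop check and a per-neighbor prepend, can be sketched in Python as follows. The table mirrors RR1's `neighbor 10.5.5.5 cluster-id 5.5.5.5` line; the function names are hypothetical, and this models only the behaviour described in this post, not IOS internals.

```python
# RR1's Cluster-IDs: the global one plus a neighbor-specific one (MCID).
GLOBAL_CLUSTER_ID = "0.0.1.0"
NEIGHBOR_CLUSTER_ID = {"10.5.5.5": "5.5.5.5"}  # neighbor 10.5.5.5 cluster-id 5.5.5.5


def own_cluster_ids():
    """Every Cluster-ID this RR considers its own for loop prevention."""
    return {GLOBAL_CLUSTER_ID} | set(NEIGHBOR_CLUSTER_ID.values())


def is_cluster_loop(cluster_list):
    """Discard the NLRI if ANY of our Cluster-IDs already appears in the list."""
    return not own_cluster_ids().isdisjoint(cluster_list)


def prepend_for(learned_from, cluster_list):
    """When reflecting, prepend the Cluster-ID tied to the neighbor the NLRI
    came from, falling back to the global Cluster-ID."""
    cid = NEIGHBOR_CLUSTER_ID.get(learned_from, GLOBAL_CLUSTER_ID)
    return (cid,) + tuple(cluster_list)


if __name__ == "__main__":
    print(prepend_for("10.5.5.5", ("0.0.1.2",)))  # learned from RR5
    print(prepend_for("10.6.6.6", ("0.0.1.2",)))  # learned from RR6
    print(is_cluster_loop(("5.5.5.5", "0.0.1.2")))
```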

So, for example, when RR1 reflects this route to RR4, it sends it with the neighbor-specific Cluster-ID instead of the global one:

RR4#show ip bgp 14.14.14.14/32
BGP routing table entry for 14.14.14.14/32, version 4
Paths: (2 available, best #1, table default)
  Advertised to update-groups:
     2
  Refresh Epoch 4
  14
    10.9.9.9 (metric 4) from 10.1.1.1 (10.1.1.1)
      Origin IGP, metric 0, localpref 100, valid, internal, best
      Originator: 10.9.9.9, Cluster list: 5.5.5.5, 0.0.1.2
      rx pathid: 0, tx pathid: 0x0
  Refresh Epoch 1
  14
    10.9.9.9 (metric 4) from 10.2.2.2 (10.2.2.2)
      Origin IGP, metric 0, localpref 100, valid, internal
      Originator: 10.9.9.9, Cluster list: 0.0.1.0, 0.0.1.2
      rx pathid: 0, tx pathid: 0

Look at the Cluster-list of the path from 10.1.1.1: Cluster list: 5.5.5.5, 0.0.1.2. Of course RR4 receives the prefix from RR2 as well, but RR2 is not configured with the MCID (multiple Cluster-IDs) feature, so he only prepends his global Cluster-ID: Cluster list: 0.0.1.0, 0.0.1.2. Let's also configure a neighbor-specific Cluster-ID for another client, R11 for example:

RR1(config-router)#neighbor 10.11.11.11 cluster-id 5.5.5.5

Now, from the perspective of RR1, RR5 and R11 belong to the same cluster: 5.5.5.5. This is useful if we want to disable client-to-client reflection for a specific cluster, but not for all clients, like this:

RR1(config-router)#no bgp client-to-client reflection intra-cluster cluster-id 5.5.5.5

What does this command do? If RR1 receives an NLRI from his client RR5, for example, he won't reflect it back to RR5, and he won't reflect it to R11 either, because R11 is configured with the same neighbor-specific Cluster-ID. We can verify this with the following command:

RR1#show bgp ipv4 unicast cluster-ids
Global cluster-id: 0.0.1.0 (configured: 0.0.1.0)
BGP client-to-client reflection:         Configured    Used
  all (inter-cluster and intra-cluster): ENABLED
  intra-cluster:                         ENABLED       ENABLED

List of cluster-ids:
Cluster-id     #-neighbors C2C-rfl-CFG C2C-rfl-USE
5.5.5.5                  2 DISABLED    DISABLED

We can see that intra-cluster client-to-client reflection is disabled for cluster 5.5.5.5. That's how the MCID feature and intra-cluster client-to-client reflection work, in short. It can get a lot more complex; I just wanted to show the basics. Just like the AS-Path, the Cluster-list attribute can sometimes be quite complicated: we can configure how it is used for loop prevention, which Cluster-IDs we prepend for different peers, which neighbors we reflect routes to, etc.
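Finally, the effect of `no bgp client-to-client reflection intra-cluster cluster-id 5.5.5.5` on RR1's reflections can be modelled like this. It is a hypothetical Python sketch that keys RR1's neighbors by their loopback addresses, covers only the iBGP cases discussed in this post, and treats clients without a neighbor-specific Cluster-ID as members of the global cluster.

```python
GLOBAL_CLUSTER_ID = "0.0.1.0"
# Neighbor-specific Cluster-IDs on RR1 (RR5 and R11 share 5.5.5.5).
NEIGHBOR_CLUSTER_ID = {"10.5.5.5": "5.5.5.5", "10.11.11.11": "5.5.5.5"}
CLIENTS = {"10.3.3.3", "10.4.4.4", "10.5.5.5", "10.6.6.6", "10.11.11.11", "10.12.12.12"}
NON_CLIENTS = {"10.2.2.2"}
# Clusters with intra-cluster client-to-client reflection disabled.
INTRA_CLUSTER_DISABLED = {"5.5.5.5"}


def cluster_of(neighbor):
    """Cluster a neighbor belongs to from RR1's point of view."""
    return NEIGHBOR_CLUSTER_ID.get(neighbor, GLOBAL_CLUSTER_ID)


def reflect_targets(sender):
    """Peers RR1 reflects an NLRI to, given the iBGP neighbor it came from."""
    if sender not in CLIENTS:
        return set(CLIENTS)              # from a non-client: clients only
    targets = set(NON_CLIENTS)           # from a client: non-clients always...
    blocked = cluster_of(sender) in INTRA_CLUSTER_DISABLED
    for client in CLIENTS:
        if blocked and cluster_of(client) == cluster_of(sender):
            continue                     # ...but not back into the same cluster
        targets.add(client)
    return targets


if __name__ == "__main__":
    print(sorted(reflect_targets("10.5.5.5")))  # from RR5
    print(sorted(reflect_targets("10.6.6.6")))  # from RR6
```

An NLRI from RR5 or R11 still reaches RR2 and the clients outside cluster 5.5.5.5, while an NLRI from RR6 (global cluster) is reflected to every peer as before.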